Live Launch of the Open-Source MLSysOps Framework!

🗓 Recorded on: Fri, Jul 18th, 2025

🕒 Time: 13:00 CET

📍 Where: Online (Zoom)

In this hands-on session, we introduced the MLSysOps Open-Source Framework following its June 2025 launch.

What you will learn:

- Why we built the MLSysOps framework and the challenges it addresses

- How to set up a testbed from scratch using our provided scripts

- Step-by-step system deployment and execution of a real-world example

- A demo of our Policy API and a sneak peek at ML integration

- A look ahead: what’s next for MLSysOps and how you can get involved

Whether you’re running workloads at the edge, in the cloud, or anywhere in between, MLSysOps brings smart automation and control where you need it.

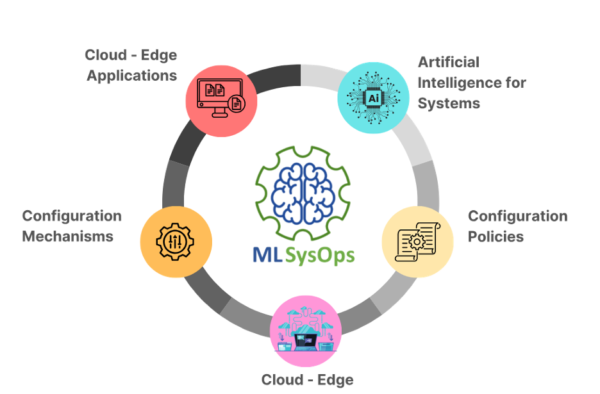

The MLSysOps Framework

The MLSysOps Framework, the open-source outcome of the MLSysOps EU Project, is an extensible, modular platform (licensed under Apache-2.0) for autonomic, explainable, and adaptive system management across the heterogeneous computing continuum, from centralized cloud infrastructures to resource-constrained far-edge devices.

Designed to reduce the need for human monitoring and manual configuration, the framework uses AI-driven decision-making and machine learning models to optimize resource utilization, application deployment, and system performance dynamically and in real time.

Architecture & Core Principles

The framework's design is grounded in a hierarchical, agent-based architecture driven by the MAPE-K loop (Monitor, Analyze, Plan, Execute over a shared Knowledge base). It introduces the concept of a system slice: a logical grouping of computing, storage, and networking resources across the continuum, managed as a self-contained unit by an instance of the MLSysOps control plane.
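To make the loop concrete, here is a minimal Python sketch of one MAPE-K iteration over a toy system slice. It is not MLSysOps code: the `SystemSlice` and `Knowledge` classes, the CPU-utilization metric, the 0.8 threshold, and the fixed 0.3 load shift per migration are all hypothetical simplifications chosen only to show how the four phases hand results to each other.

```python
from dataclasses import dataclass, field


@dataclass
class Knowledge:
    """Shared knowledge: a utilization threshold plus a log of past decisions."""
    cpu_threshold: float = 0.8
    history: list = field(default_factory=list)


@dataclass
class SystemSlice:
    """Toy slice (hypothetical): maps node name -> CPU utilization in [0.0, 1.0]."""
    nodes: dict


def monitor(slice_: SystemSlice) -> dict:
    # Monitor: take a snapshot of the current telemetry.
    return dict(slice_.nodes)


def analyze(metrics: dict, kb: Knowledge) -> list:
    # Analyze: flag nodes whose utilization exceeds the known threshold.
    return [name for name, cpu in metrics.items() if cpu > kb.cpu_threshold]


def plan(overloaded: list, metrics: dict) -> list:
    # Plan: propose moving load from each overloaded node to the least-loaded node.
    if not overloaded:
        return []
    target = min(metrics, key=metrics.get)
    return [("migrate", src, target) for src in overloaded if src != target]


def execute(actions: list, slice_: SystemSlice, shift: float = 0.3) -> None:
    # Execute: apply each action; a migration moves a fixed share of load (toy model).
    for _, src, dst in actions:
        slice_.nodes[src] -= shift
        slice_.nodes[dst] += shift


def mape_k_iteration(slice_: SystemSlice, kb: Knowledge) -> list:
    metrics = monitor(slice_)
    actions = plan(analyze(metrics, kb), metrics)
    execute(actions, slice_)
    kb.history.append(actions)  # Knowledge: record the decision for later analysis
    return actions


if __name__ == "__main__":
    s = SystemSlice({"edge-1": 0.95, "edge-2": 0.2, "cloud-1": 0.5})
    kb = Knowledge()
    print(mape_k_iteration(s, kb))  # [('migrate', 'edge-1', 'edge-2')]
    print(mape_k_iteration(s, kb))  # [] (no node above the threshold any more)
```

In the framework as described here, analysis and planning can draw on ML models and configurable policies rather than a single fixed threshold; the sketch only illustrates the control flow of the loop.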

Key architectural components include:

- Node Agents that handle control at individual edge and far-edge nodes

- Cluster Agents to manage resource domains comprising multiple nodes

- A global Continuum Agent to coordinate cross-domain decisions within a given system slice

- Flexible and dynamic ML model integration, enabling the addition and invocation of explainable, continually trained, and modular intelligence

- Northbound/Southbound interfaces for interaction with external clients and for interoperability with external systems and orchestrators (e.g., Kubernetes)

- A Command Line Interface (CLI) that connects to the Northbound API of a given system slice and supports basic operations such as deploying applications and monitoring their execution

The framework operates as an abstraction middleware, managing diverse infrastructure layers and supporting data-driven autonomy.
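The node, cluster, and continuum levels can be pictured as an escalation chain: an agent tries to resolve a request within its own scope and passes it upward only when it cannot. The sketch below assumes a single spare-CPU figure per agent and a simple "escalate on insufficient capacity" rule; the `Agent` class, its fields, and the node and cluster names are hypothetical and do not reflect the framework's real agent interfaces.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Agent:
    """One level of a hypothetical node -> cluster -> continuum hierarchy."""
    name: str
    level: str                        # "node", "cluster", or "continuum"
    free_cpu: float                   # spare CPU this agent can allocate in its scope
    parent: Optional["Agent"] = None  # next agent up the hierarchy, if any

    def handle(self, request_cpu: float) -> str:
        """Place a request as locally as possible; escalate when out of capacity."""
        if request_cpu <= self.free_cpu:
            self.free_cpu -= request_cpu
            return f"{self.level}:{self.name}"
        if self.parent is not None:
            return self.parent.handle(request_cpu)
        raise RuntimeError(f"no capacity in the slice for {request_cpu} CPU")


if __name__ == "__main__":
    continuum = Agent("slice-1", "continuum", free_cpu=16.0)
    cluster = Agent("edge-cluster-a", "cluster", free_cpu=4.0, parent=continuum)
    node = Agent("rpi-01", "node", free_cpu=0.5, parent=cluster)
    print(node.handle(0.25))  # node:rpi-01
    print(node.handle(2.0))   # cluster:edge-cluster-a
    print(node.handle(8.0))   # continuum:slice-1
```

Keeping decisions at the lowest level that can satisfy them limits how often the higher-level agents must get involved; the real agents coordinate through richer policies than this single capacity check.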

Key Features

- Kubernetes-native deployment using Custom Resource Definitions (CRDs)

- Multi-cluster orchestration powered by Karmada

- Dynamic, easily extensible telemetry and observability layer

- Plugin systems to support configuration policies and custom mechanisms

- Northbound REST API service for external applications or CLI use

- ML Connector Service for seamless model deployment, retraining, and explainability integration

- Support for far-edge devices and multiple container runtimes

- System inventory and application targeting via CRDs

- Built-in storage and resource management across the continuum
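CRD-based system inventory and application targeting can be illustrated with plain Python dictionaries shaped like Kubernetes custom resources (`metadata` plus `spec`). The `apiVersion`, `kind`, and every field under `spec` (`layer`, `arch`, `memory_mb`, `runtimes`, `requirements`) are hypothetical placeholders, not the framework's actual CRD schema; the repository holds the real definitions. The sketch filters the inventory to find candidate nodes for each application component.

```python
# Hypothetical system inventory: one entry per node, shaped like a Kubernetes
# custom resource (metadata + spec). Field names are illustrative only.
INVENTORY = [
    {"metadata": {"name": "rpi-01"},
     "spec": {"layer": "far-edge", "arch": "arm64", "memory_mb": 1024,
              "runtimes": ["runc"]}},
    {"metadata": {"name": "jetson-01"},
     "spec": {"layer": "edge", "arch": "arm64", "memory_mb": 8192,
              "runtimes": ["runc", "nvidia"]}},
    {"metadata": {"name": "vm-01"},
     "spec": {"layer": "cloud", "arch": "amd64", "memory_mb": 16384,
              "runtimes": ["runc", "kata"]}},
]

# Hypothetical application description with per-component requirements.
APP = {
    "apiVersion": "example.org/v1",  # placeholder, not the real MLSysOps group
    "kind": "DemoApplication",
    "metadata": {"name": "camera-analytics"},
    "spec": {"components": [
        {"name": "sensor-reader", "requirements": {"arch": "arm64"}},
        {"name": "detector",
         "requirements": {"arch": "arm64", "min_memory_mb": 2048}},
        {"name": "aggregator",
         "requirements": {"min_memory_mb": 4096, "runtime": "kata"}},
    ]},
}


def candidate_nodes(component: dict, inventory: list) -> list:
    """Return names of nodes that satisfy every requirement of a component."""
    req = component["requirements"]
    matches = []
    for node in inventory:
        spec = node["spec"]
        if "arch" in req and spec["arch"] != req["arch"]:
            continue  # wrong CPU architecture
        if spec["memory_mb"] < req.get("min_memory_mb", 0):
            continue  # not enough memory
        if "runtime" in req and req["runtime"] not in spec["runtimes"]:
            continue  # required container runtime not available
        matches.append(node["metadata"]["name"])
    return matches


def target_application(app: dict, inventory: list) -> dict:
    """Map each component name to its list of candidate nodes."""
    return {c["name"]: candidate_nodes(c, inventory)
            for c in app["spec"]["components"]}


if __name__ == "__main__":
    print(target_application(APP, INVENTORY))
    # {'sensor-reader': ['rpi-01', 'jetson-01'], 'detector': ['jetson-01'], 'aggregator': ['vm-01']}
```

Describing both the infrastructure and the application as declarative resources lets placement be computed by matching one against the other; a real placement step would also weigh live telemetry and policies, not just static attributes.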

Use Cases

- Autonomic application deployment with ML-driven optimization

- Adaptive system configuration based on workload and context-aware policies

- Policy-based orchestration to meet application and system-level SLAs

- Explainable AI integration for transparent system decisions

- Resource-aware deployment for IoT and edge environments with constrained hardware

Explore the Code / Get Started

GitHub Repository: github.com/mlsysops-eu/mlsysops-framework